On 21st February 2011, Microsoft announced that it would release a beta version of a Kinect SDK for Windows in spring 2011. According to Microsoft Research, the SDK is

“…intended for non-commercial use to enable experimentation in the world of natural user interface experiences”

There has been a lot of buzz about Kinect in the open source community with many demo apps being built showing what is possible with this new way of interaction. If these early prototypes and apps released by enthusiasts are anything to go by, we have a lot to look forward to once the official SDK becomes available.

I’m pleased to hear that the intention is for the SDK to be made available to “researchers and enthusiasts”, as there is much work to be done to find out the best ways that Kinect can be put to use, especially for non-gaming applications. However, I am concerned that the restrictions in the licence mean that commercial organisations who wish to invest in Kinect have no way of delivering applications to the marketplace. I suspect that this simply reflects the state of this form of natural user interaction: despite the enthusiasm, Kinect is still very much in its infancy for non-gaming applications, and research needs to be carried out to explore how and where it can best be used.

Whilst we wait for the official SDK to be released, and given the restrictions on its use, I recently spent some time looking at the open source APIs available now and trying them out to see how easy it is to start coding for the Kinect on the PC. The following is my review of the main APIs currently available.

Code Laboratories (CL) NUI Platform

The CL NUI Platform is a good place to start for anyone wanting to experiment with Kinect and who wants to become acquainted with the hardware of the device. This platform was created by “AlexP”, who was responsible for first hacking the Kinect back in November 2010. The platform provides a .NET API and a couple of sample applications written in WPF/C#.

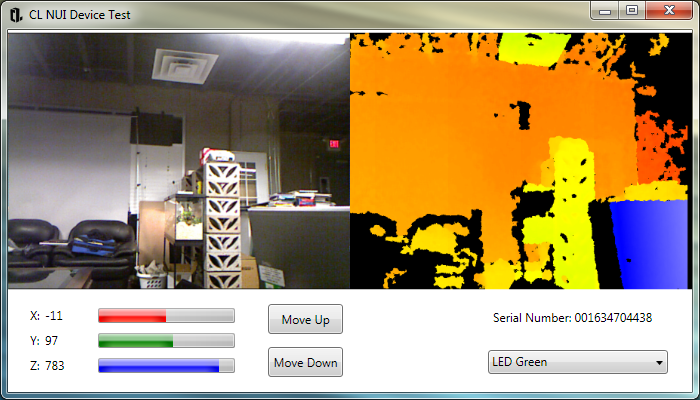

CL NUI Platform sample application showing interaction with Kinect hardware

The API allows developers to interact directly with the Kinect hardware, including the RGB camera, depth sensor, accelerometer, motor and LED. It does not currently allow use of the audio hardware on the device; however, the Code Laboratories website says that this is in the pipeline.

What you will quickly discover if you use this platform is that it is not possible to interact with the advanced features of Kinect such as gesture control. This is because these capabilities are actually provided by the software API and are not baked directly into the hardware. However, the platform is a great introduction to the facilities offered by the hardware and it is possible to get the API up and running very quickly without too much fuss.

APIs are designed to provide a level of abstraction that makes it easier to code for the device. One interesting feature of the CL NUI Platform is that it also provides access to the raw data returned from both the RGB camera and the 3D depth of field sensor (more on these later). If the official SDK does not support access at this low level, this platform would allow developers to use the depth data to do some quite interesting things: for example, building up 3D representations of captured images, or measuring the distance of objects in 3D space. Some interesting examples that do just this have already been put together.
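To give a feel for what raw depth access makes possible, here is a minimal sketch in Python of measuring the distance between two points in a depth image. It assumes the raw sensor values have already been converted to metres along the camera's optical axis, and it uses a simple pinhole camera model. The intrinsic parameters (`fx`, `fy`, `cx`, `cy`) are illustrative placeholders rather than calibrated Kinect values, and none of the names come from the CL NUI API.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Intrinsics:
    """Pinhole parameters of the depth camera (illustrative placeholders)."""
    fx: float  # focal length in pixels, x axis
    fy: float  # focal length in pixels, y axis
    cx: float  # principal point, x
    cy: float  # principal point, y


def pixel_to_point(u, v, depth_m, k):
    """Back-project pixel (u, v) with depth depth_m (metres along the optical
    axis) into camera-space coordinates (x, y, z), in metres."""
    x = (u - k.cx) * depth_m / k.fx
    y = (v - k.cy) * depth_m / k.fy
    return (x, y, depth_m)


def distance(p, q):
    """Straight-line distance between two 3D points, in metres."""
    return math.dist(p, q)


# Placeholder intrinsics for a 640x480 depth image (NOT calibrated values).
k = Intrinsics(fx=580.0, fy=580.0, cx=320.0, cy=240.0)
a = pixel_to_point(320, 240, 2.0, k)  # pixel at the image centre, 2 m away
b = pixel_to_point(610, 240, 2.0, k)  # pixel further right, also 2 m away
print(distance(a, b))
```

Here both pixels lie 2 m from the sensor and the computed separation comes out at 1 m. With real data, the accuracy would depend on calibration and on how the raw sensor values are converted to metres.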

Key features of the Kinect hardware

Kinect consists of a number of bits of hardware which together enable the device to be used for gesture control and other applications:

- 3D depth of field sensor and infra-red laser projector

- RGB Camera

- Motor

- Accelerometer

- 3D Audio hardware

The essential hardware is the 3D depth of field sensor and IR laser. Working together with the grid of infra-red points projected by the laser, the depth of field sensor is able to detect the distance of points in 3D space from the sensor. I’ll leave an in-depth discussion of this to others, but in essence this produces a depth map in which each pixel represents the distance of that point from the sensing device. You can see an example, in which distance is represented by colour, in the right-hand image of the CL NUI Device Test sample application (screenshot above). What the CL NUI Platform gives you is basically a raw feed of depth of field data from the sensor, relating to each pixel in the RGB image.
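As a rough illustration of how a depth map can be displayed in colour, as in the CL NUI Device Test screenshot, the sketch below maps each depth value onto a red-to-blue gradient. The conventions are assumptions rather than the CL NUI Platform's: depth is taken to be in millimetres, a value of 0 is treated as “no reading”, and the near and far limits are arbitrary.

```python
# Assumed conventions: depth in millimetres, 0 means "no reading".
NEAR_MM = 500   # arbitrary near limit, drawn as pure red
FAR_MM = 4000   # arbitrary far limit, drawn as pure blue


def depth_to_rgb(depth_mm, near=NEAR_MM, far=FAR_MM):
    """Map one depth value to an (r, g, b) tuple: near = red, far = blue."""
    if depth_mm == 0:
        return (0, 0, 0)  # no reading: draw as black
    # Clamp into [near, far], then normalise to t in [0, 1].
    d = min(max(depth_mm, near), far)
    t = (d - near) / (far - near)
    return (round(255 * (1 - t)), 0, round(255 * t))


def colourise(depth_map):
    """Apply depth_to_rgb to every pixel of a depth map given as a list of rows."""
    return [[depth_to_rgb(d) for d in row] for row in depth_map]


frame = [[0, 500], [2250, 4000]]
print(colourise(frame))
```

A linear gradient keeps the sketch simple; a real viewer might use a perceptual colour map or a histogram-based scale instead.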

To combine the raw depth of field and image data and use these for object tracking and gesture control, these images need to be processed by a software middleware component which can translate the raw data into meaningful information that can enable user interaction. This middleware is usually provided as part of the API or SDK, or in some cases by a related installation required by the API.
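To make the idea of middleware a little more concrete, here is a deliberately naive sketch of the kind of processing involved: it treats the pixel closest to the sensor in each frame as the user's hand. This is a toy heuristic for illustration only, not how PrimeSense or Microsoft perform tracking, and it assumes depth maps are lists of rows in millimetres with 0 meaning no reading.

```python
def nearest_point(depth_map, min_valid=1):
    """Return (row, col, depth) of the closest valid pixel, or None if there is none."""
    best = None
    for r, row in enumerate(depth_map):
        for c, d in enumerate(row):
            if d < min_valid:
                continue  # skip "no reading" pixels (assumed to be 0)
            if best is None or d < best[2]:
                best = (r, c, d)
    return best


def track(frames):
    """Yield the nearest point for each depth map in a sequence of frames."""
    for frame in frames:
        yield nearest_point(frame)


frames = [
    [[0, 1800], [1200, 2000]],
    [[1100, 1800], [0, 2000]],
]
print(list(track(frames)))
```

Real middleware has to cope with noise, multiple people and objects closer than the hand, which is exactly why it is far more sophisticated than this.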

This leads me to my next API review…

PrimeSense NITE Middleware

PrimeSense developed the 3D depth of field sensor and IR technology which is used in Kinect. In January 2011, PrimeSense released their own API to enable developers to make use of this part of the Kinect hardware.

The PrimeSense NITE Middleware (NITE = Natural Interaction Technology for End-user) consists of an API and visual processing software that uses the depth data provided by the PrimeSense hardware in the Kinect and translates it into meaningful data to perform user identification, feature detection, gesture control and user control acquisition.

The NITE Middleware processes the raw depth and image data to support more advanced user interaction and control scenarios. The following are the key features provided by the visual processing algorithms in the PrimeSense NITE Middleware. You can find out more about these features by installing the Middleware and browsing the documentation which is included.

Session Management

A user starts a “session” with the device by performing a special gesture called a “focus gesture”, such as a “click” or “wave”. This allows the user performing the gesture to gain control and start a session with the device. In this mode, that user controls the device, and until they release it, no one else can gain control. The API supports detection of the focus gesture and tracking of the user who has control of the device.
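The session rules described above amount to a small state machine. The following Python sketch models them under stated assumptions: user IDs are integers, gestures arrive as plain strings, and the class, method and gesture names are hypothetical rather than part of the NITE API.

```python
from typing import Optional


class SessionManager:
    """Toy model of focus-gesture sessions: one controlling user at a time."""

    FOCUS_GESTURES = {"click", "wave"}  # hypothetical gesture names

    def __init__(self) -> None:
        self.controller: Optional[int] = None  # ID of the user in control, if any

    def on_gesture(self, user_id: int, gesture: str) -> bool:
        """Handle a detected gesture; return True if this user now has control."""
        if self.controller is None and gesture in self.FOCUS_GESTURES:
            self.controller = user_id  # a focus gesture starts a new session
        return self.controller == user_id

    def release(self, user_id: int) -> None:
        """End the session, but only if the caller is the controlling user."""
        if self.controller == user_id:
            self.controller = None


s = SessionManager()
print(s.on_gesture(1, "wave"))   # user 1 gains control
print(s.on_gesture(2, "click"))  # user 2 is ignored while user 1 is in control
s.release(1)
print(s.on_gesture(2, "click"))  # after release, user 2 can take over
```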

Gesture control

Gesture control includes detection of the focus gesture as well as hand tracking.

When the API detects a focus gesture, it can report this to an application. The API supports a number of focus gesture types, including Click, Wave, Swipe Left and Swipe Right.

In addition, the middleware can track the controlling hand that performed the focus gesture and measure its position in space relative to the sensor.
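To illustrate how gestures might be recognised from tracked hand positions, here is a simple sketch that classifies a short history of horizontal hand positions (in metres, relative to the sensor) as a swipe or a wave. The thresholds and function names are hypothetical, and this is not the algorithm NITE uses; it only shows the general idea of turning a stream of positions into discrete gesture events.

```python
def detect_swipe(xs, min_distance=0.3):
    """Classify hand x-positions (metres) as "swipe_left", "swipe_right" or None.

    A swipe here means consistent movement in one direction covering at
    least min_distance. The threshold is illustrative only.
    """
    if len(xs) < 2:
        return None
    steps = [b - a for a, b in zip(xs, xs[1:])]
    total = xs[-1] - xs[0]
    if all(s > 0 for s in steps) and total >= min_distance:
        return "swipe_right"
    if all(s < 0 for s in steps) and -total >= min_distance:
        return "swipe_left"
    return None


def detect_wave(xs, min_reversals=3, min_step=0.02):
    """Treat repeated left/right reversals of the hand as a wave."""
    directions = []
    for a, b in zip(xs, xs[1:]):
        if abs(b - a) >= min_step:  # ignore small jitter
            directions.append(1 if b > a else -1)
    reversals = sum(1 for p, q in zip(directions, directions[1:]) if p != q)
    return reversals >= min_reversals


print(detect_swipe([0.0, 0.1, 0.2, 0.35]))      # steady movement to the right
print(detect_wave([0.0, 0.1, 0.0, 0.1, 0.0]))  # three changes of direction
```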

User segmentation

The middleware can identify and track individual users in a scene. Each user is assigned a unique identifier, and a label map gives the user ID for each pixel in the image. This allows applications to track the outline of users in an image, and the label map is in turn used by the skeleton tracking algorithm.
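A label map like this is straightforward to work with. The sketch below assumes the map is a list of rows of integer user IDs, with 0 meaning background (an assumption, not necessarily NITE's convention). It computes a bounding box for each user and the outline pixels of a given user.

```python
def user_bounding_boxes(label_map):
    """Map each user ID (0 = background) to (top, left, bottom, right)."""
    boxes = {}
    for r, row in enumerate(label_map):
        for c, uid in enumerate(row):
            if uid == 0:
                continue  # background pixel
            if uid not in boxes:
                boxes[uid] = [r, c, r, c]
            else:
                b = boxes[uid]
                b[0] = min(b[0], r)
                b[1] = min(b[1], c)
                b[2] = max(b[2], r)
                b[3] = max(b[3], c)
    return {uid: tuple(b) for uid, b in boxes.items()}


def outline(label_map, uid):
    """Return pixels of user uid that have at least one 4-neighbour outside the user."""
    h, w = len(label_map), len(label_map[0])
    edge = set()
    for r in range(h):
        for c in range(w):
            if label_map[r][c] != uid:
                continue
            for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                nr, nc = r + dr, c + dc
                # The image border counts as "outside" the user.
                if not (0 <= nr < h and 0 <= nc < w) or label_map[nr][nc] != uid:
                    edge.add((r, c))
                    break
    return edge


labels = [
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [2, 0, 0, 0],
]
print(user_bounding_boxes(labels))
print(sorted(outline(labels, 1)))
```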

Skeleton tracking

This feature of the middleware is interesting, as it allows an application to detect the positions and orientations of skeleton joints for each user in a scene. The image below, taken from the “NITE Algorithms” documentation, shows the joints tracked by the algorithm.
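Once joint positions are available, applications can derive higher-level information from them. As a hypothetical example, the sketch below computes the angle at a joint (such as an elbow) from three 3D joint positions in metres. The joint names and coordinates are made up for illustration and are not NITE's identifiers.

```python
import math
from typing import Dict, Tuple

Vec3 = Tuple[float, float, float]


def joint_angle(a: Vec3, b: Vec3, c: Vec3) -> float:
    """Angle in degrees at joint b, between the segments b->a and b->c."""
    u = [a[i] - b[i] for i in range(3)]
    v = [c[i] - b[i] for i in range(3)]
    dot = sum(x * y for x, y in zip(u, v))
    norm = math.hypot(*u) * math.hypot(*v)
    if norm == 0:
        raise ValueError("joint positions must be distinct")
    # Clamp to [-1, 1] to guard against floating-point drift before acos.
    cos_theta = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cos_theta))


# Hypothetical joint positions (x, y, z) in metres, relative to the sensor.
skeleton: Dict[str, Vec3] = {
    "right_shoulder": (0.2, 0.5, 2.0),
    "right_elbow": (0.2, 0.2, 2.0),
    "right_hand": (0.5, 0.2, 2.0),
}
print(joint_angle(skeleton["right_shoulder"],
                  skeleton["right_elbow"],
                  skeleton["right_hand"]))
```

Here the upper arm points straight up from the elbow and the forearm points sideways, so the elbow is bent at a right angle.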

Installing the PrimeSense NITE Middleware can be a little daunting as a number of components need to be installed in the correct order alongside the middleware itself.

PrimeSense provide a download page listing the required components. Unfortunately, I found that the guidance on the PrimeSense website is not very detailed; the best guide I have come across is an excellent blog post by Vangos Pterneas, which describes the process in detail and includes some excellent troubleshooting advice.

So far, despite following the advice in the post, I have had only limited success in using the NITE Middleware, and I have not been able to get the NITE sample applications to connect. I will keep trying and will report on my progress in future posts.

Microsoft Kinect SDK and future NI devices…

PrimeSense have built the NITE algorithms on a set of cross-platform, multi-language standards defined by OpenNI. This organisation was set up by PrimeSense to promote a standard API and compatibility between Natural Interaction (NI) devices. The aim is that a single API should be applicable to different NI devices.

In my personal opinion, it is likely that the aspects of the Microsoft SDK which use the PrimeSense depth sensor will be based on the PrimeSense NITE Middleware. Familiarising yourself now with the PrimeSense API will therefore be a good introduction to the broad features that are likely to be provided by the official Microsoft Kinect SDK. I am particularly interested to see whether Microsoft choose to base the SDK on the standards laid down by OpenNI, or whether they choose to implement their own. Given that PrimeSense are likely to be releasing their own sensor manufactured by ASUS, I wonder whether there are any conditions in the licensing of the hardware to Microsoft that dictate that the API is based on the OpenNI standards. This would ensure that developers can build apps which work on a range of future devices and are not hard-coded to work on Kinect only.
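The benefit of a common standard can be sketched as an interface that applications code against, with one implementation per device. The classes below are hypothetical stubs that return fixed frames; they do not reflect the actual OpenNI API, but they show how the same application logic could run unchanged on a Kinect or on another NI device.

```python
from abc import ABC, abstractmethod
from typing import List

DepthFrame = List[List[int]]  # rows of depth values in millimetres (assumed)


class DepthSensor(ABC):
    """Device-neutral interface that the application codes against (hypothetical)."""

    @abstractmethod
    def read_depth(self) -> DepthFrame:
        """Return one depth frame; 0 means no reading."""


class KinectStub(DepthSensor):
    """Stand-in for a Kinect backend; returns a fixed frame."""

    def read_depth(self) -> DepthFrame:
        return [[1200, 1300], [1250, 0]]


class AsusSensorStub(DepthSensor):
    """Stand-in for another vendor's backend; returns a fixed frame."""

    def read_depth(self) -> DepthFrame:
        return [[900, 950], [0, 1000]]


def closest_distance(sensor: DepthSensor) -> int:
    """Application logic that works with any sensor implementing the interface."""
    valid = [d for row in sensor.read_depth() for d in row if d > 0]
    return min(valid)


for sensor in (KinectStub(), AsusSensorStub()):
    print(type(sensor).__name__, closest_distance(sensor))
```

Only the backend classes know about specific hardware, so supporting a new device means adding one class rather than changing the application.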

Based on my limited success using the PrimeSense NITE API, I can’t help thinking that it is very early days for development with Kinect, and I only hope that the official Kinect SDK will simplify this process for developers. That would leave developers free to focus on innovative ways to use the device rather than dealing with installation and technical idiosyncrasies.